JVM series: the @Contended annotation and false sharing


Introduction

Modern CPUs use caches to improve performance. Multi-core CPUs also rely on cache-coherence protocols such as MESI to keep the caches of different cores consistent with each other. MESI works very well, but certain memory access patterns can make it a source of performance degradation.

What exactly happens? Let's take a look.

The origin of false sharing

To speed up processing, CPUs introduce caches. Let's first look at a schematic diagram of the CPU cache:

The CPU cache is a temporary storage area between the CPU and main memory. Its capacity is much smaller than that of main memory, but it can be accessed much faster.

When the CPU reads data, it searches the cache levels one after another. Only if the data is found in none of the caches is it read from main memory.

To simplify cache handling and make it more efficient, data moves between the cache and memory in units of one cache line (Cache Line). Each read loads one whole cache line into the cache.

On macOS, you can use `sysctl machdep.cpu.cache.linesize` (or `sysctl hw.cachelinesize`) to check the size of the cache line.

On Linux, use `getconf LEVEL1_DCACHE_LINESIZE` to get the size of the cache line.

On the author's machine, the cache line size is 64 bytes.
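On Linux, a Java program can also read the same value from sysfs. The class below is a minimal sketch. The class name `CacheLineSize` is hypothetical. It assumes that `index0` of `cpu0` is the L1 data cache, which is typical on x86 but not guaranteed. It also assumes a fallback of 64 bytes when the file is unavailable, for example on macOS, on Windows, or in some containers.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

/** Sketch: detect the cache line size on Linux via sysfs, with a fallback. */
final class CacheLineSize {
    // Assumption: cache index0 of cpu0 is the L1 data cache.
    static final Path SYSFS_LINE_SIZE =
            Paths.get("/sys/devices/system/cpu/cpu0/cache/index0/coherency_line_size");

    // Assumed fallback when sysfs is not available.
    static final int DEFAULT_LINE_SIZE = 64;

    static int detect() {
        try {
            String text = new String(Files.readAllBytes(SYSFS_LINE_SIZE), StandardCharsets.US_ASCII).trim();
            int size = Integer.parseInt(text);
            return size > 0 ? size : DEFAULT_LINE_SIZE;
        } catch (IOException | NumberFormatException | SecurityException e) {
            // File missing, unreadable, or malformed: use the assumed default.
            return DEFAULT_LINE_SIZE;
        }
    }

    public static void main(String[] args) {
        System.out.println("Cache line size: " + detect() + " bytes");
    }
}
```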

Consider the following object:

```java
public class CacheLine {
    public long a;
    public long b;
}
```

This is a very simple object. As discussed in the previous article, on a 64-bit JVM with compressed class pointers its size is 12 bytes of object header + 8 bytes for the first long + 8 bytes for the second long + 4 bytes of alignment padding, for a total of 32 bytes.

Since 32 bytes < 64 bytes, the whole object fits in a single cache line, provided it does not happen to straddle a line boundary.
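The "same cache line" reasoning is simple offset arithmetic. The sketch below assumes 64-byte lines and an object that starts exactly on a line boundary, which the JVM does not guarantee. It places `a` at offset 16 (12-byte header plus a 4-byte alignment gap) and `b` at offset 24, and shows that both land in line 0. The class `CacheLineMath` and its methods are hypothetical helpers written for illustration.

```java
/**
 * Sketch: which cache line does each field land in?
 * Assumptions: 64-byte lines, object base aligned to a line boundary.
 */
final class CacheLineMath {
    static final int LINE_SIZE = 64; // bytes

    /** Index of the cache line containing the byte at this offset. */
    static long lineOf(long offset) {
        return offset / LINE_SIZE;
    }

    /** True if [off1, off1 + len1) and [off2, off2 + len2) touch at least one common line. */
    static boolean shareLine(long off1, int len1, long off2, int len2) {
        long first1 = lineOf(off1), last1 = lineOf(off1 + len1 - 1);
        long first2 = lineOf(off2), last2 = lineOf(off2 + len2 - 1);
        return first1 <= last2 && first2 <= last1;
    }

    public static void main(String[] args) {
        long offsetA = 12 + 4;      // 12-byte header + 4-byte alignment gap
        long offsetB = offsetA + 8; // b follows the 8-byte long a
        System.out.println("a -> line " + lineOf(offsetA) + ", b -> line " + lineOf(offsetB)); // a -> line 0, b -> line 0
        System.out.println("same line? " + shareLine(offsetA, 8, offsetB, 8));               // same line? true
    }
}
```

If the object's base address is shifted, the same two fields can end up split across a boundary. The `shareLine` check captures that case, because it compares the first and last line each field touches.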

Now here is the problem: in a multi-threaded environment, what happens if thread1 keeps incrementing a while thread2 keeps incrementing b?

1. First, the cache line holding the newly created object is loaded into the caches of both CPU1 and CPU2.

2. thread1, running on CPU1, increments a.

3. Under MESI, the cache-coherence protocol between CPU caches (it is fairly complex and will not be explained in detail here), CPU1 has modified the cache line. CPU2's copy of that cache line is therefore marked I (Invalid).

4. thread2, running on CPU2, increments b. Because CPU2's copy of the line has been invalidated, the line must be fetched again (from main memory, or on many processors directly from CPU1's cache) before b can be updated. This write in turn invalidates CPU1's copy, so the line keeps bouncing between the two cores.

Note that the expensive part is step 4. a and b are two different long fields, but they sit in the same cache line. As a result, the two threads contend for that line as if they were sharing data, even though neither thread ever touches the other's variable. This is called false sharing.
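The four steps can be replayed with a toy model. The hypothetical `MesiLine` class below tracks the MESI state of one cache line in each core and counts how often a core has to fetch the line. It is a deliberately simplified sketch: no bus messages, no timing, and the two writers strictly alternate, which is the worst case. It is not how hardware actually implements the protocol.

```java
import java.util.Arrays;

/** Toy MESI model for ONE cache line shared by several cores (simplified sketch). */
final class MesiLine {
    enum State { MODIFIED, EXCLUSIVE, SHARED, INVALID }

    private final State[] states;
    private int misses;

    MesiLine(int cores) {
        states = new State[cores];
        Arrays.fill(states, State.INVALID); // no core holds the line yet
    }

    /** A core reads any byte of the line. */
    void read(int core) {
        if (states[core] != State.INVALID) {
            return; // hit: M, E and S copies are all readable
        }
        misses++;
        boolean othersHoldIt = false;
        for (int i = 0; i < states.length; i++) {
            if (i != core && states[i] != State.INVALID) {
                states[i] = State.SHARED; // an M or E holder is downgraded (M writes back first)
                othersHoldIt = true;
            }
        }
        states[core] = othersHoldIt ? State.SHARED : State.EXCLUSIVE;
    }

    /** A core writes any byte of the line, e.g. field a or field b. */
    void write(int core) {
        if (states[core] == State.INVALID) {
            misses++; // read-for-ownership: the line must be fetched first
        }
        for (int i = 0; i < states.length; i++) {
            if (i != core) {
                states[i] = State.INVALID; // every other copy becomes Invalid
            }
        }
        states[core] = State.MODIFIED;
    }

    State state(int core) { return states[core]; }

    int misses() { return misses; }

    public static void main(String[] args) {
        MesiLine shared = new MesiLine(2); // a and b in the SAME line
        MesiLine lineA = new MesiLine(2);  // a and b in DIFFERENT lines
        MesiLine lineB = new MesiLine(2);
        for (int i = 0; i < 1000; i++) {
            shared.write(0); // thread1 on CPU1 increments a
            shared.write(1); // thread2 on CPU2 increments b
            lineA.write(0);
            lineB.write(1);
        }
        System.out.println("same line:       " + shared.misses() + " misses");                  // 2000
        System.out.println("different lines: " + (lineA.misses() + lineB.misses()) + " misses"); // 2
    }
}
```

With a shared line, every single write misses, because the other core has just invalidated it. With separate lines, each core misses once and then keeps its line in the M state.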

How to deal with it?

So how can we solve this problem?

Before @Contended was available, we had to pad the object manually with unused fields.

```java
public class CacheLine {
    public long actualValue;
    // 8 unused longs = 64 bytes of padding after actualValue
    public long p0, p1, p2, p3, p4, p5, p6, p7;
}
```

Here we manually add unused long fields so that actualValue occupies a cache line of its own, which avoids false sharing.

However, this trick is not reliable. javac keeps the unused fields, but the JVM does not guarantee any particular field layout and may reorder fields. The padding is therefore not guaranteed to end up around actualValue.

Fortunately, JDK 8 introduced the sun.misc.Contended annotation, which tells the JVM to insert the padding for us.

Analysis with JOL

Next, we use JOL (Java Object Layout) to compare the memory layout of an object with the @Contended annotation against one without it.

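```java
package com.flydean;

import lombok.extern.slf4j.Slf4j;        // assumed source of the `log` field
import org.junit.Test;                   // JUnit 4 assumed
import org.openjdk.jol.info.ClassLayout;

@Slf4j
public class CacheLineJOL {

    @Test
    public void useJol() {
        // Layout derived from the class alone: field offsets, sizes, gaps
        log.info("{}", ClassLayout.parseClass(CacheLine.class).toPrintable());
        // Layout of a live instance, including the actual header bytes
        log.info("{}", ClassLayout.parseInstance(new CacheLine()).toPrintable());
        log.info("{}", ClassLayout.parseClass(CacheLinePadded.class).toPrintable());
        log.info("{}", ClassLayout.parseInstance(new CacheLinePadded()).toPrintable());
    }
}
```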

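Note that by default the JVM honors @Contended only in JDK-internal classes and ignores it in our own code. The -XX:-RestrictContended option must therefore be added when using JOL to analyze our own @Contended-annotated classes.

You can also set -XX:ContendedPaddingWidth to control the size of the padding (128 bytes by default).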
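The current values of both flags can be checked from inside a running HotSpot JVM through `com.sun.management.HotSpotDiagnosticMXBean`. The sketch below assumes a HotSpot-based JVM and returns `null` when the bean or the flag is unavailable. The class name `ContendedFlags` is hypothetical.

```java
import com.sun.management.HotSpotDiagnosticMXBean;
import java.lang.management.ManagementFactory;

/** Sketch: read HotSpot flags that control @Contended. */
final class ContendedFlags {
    // Returns the flag's current value, or null if this JVM does not expose it.
    static String flag(String name) {
        try {
            HotSpotDiagnosticMXBean hotspot =
                    ManagementFactory.getPlatformMXBean(HotSpotDiagnosticMXBean.class);
            return hotspot == null ? null : hotspot.getVMOption(name).getValue();
        } catch (IllegalArgumentException e) {
            return null; // unknown flag, or not a HotSpot-based JVM
        }
    }

    public static void main(String[] args) {
        // "true" unless the JVM was started with -XX:-RestrictContended
        System.out.println("RestrictContended = " + flag("RestrictContended"));
        // Padding in bytes that HotSpot inserts for @Contended
        System.out.println("ContendedPaddingWidth = " + flag("ContendedPaddingWidth"));
    }
}
```

With -XX:-RestrictContended in place, the JOL test above prints the following layouts: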

```
INFO com.flydean.CacheLineJOL - com.flydean.CacheLine object internals:
 OFFSET  SIZE   TYPE DESCRIPTION               VALUE
      0     4        (object header)           05 00 00 00 (00000101 00000000 00000000 00000000) (5)
      4     4        (object header)           00 00 00 00 (00000000 00000000 00000000 00000000) (0)
      8     4        (object header)           d0 29 17 00 (11010000 00101001 00010111 00000000) (1518032)
     12     4        (alignment/padding gap)
     16     8   long CacheLine.valueA          0
     24     8   long CacheLine.valueB          0
Instance size: 32 bytes
Space losses: 4 bytes internal + 0 bytes external = 4 bytes total

INFO com.flydean.CacheLineJOL - com.flydean.CacheLinePadded object internals:
 OFFSET  SIZE   TYPE DESCRIPTION               VALUE
      0     4        (object header)           05 00 00 00 (00000101 00000000 00000000 00000000) (5)
      4     4        (object header)           00 00 00 00 (00000000 00000000 00000000 00000000) (0)
      8     4        (object header)           d2 5d 17 00 (11010010 01011101 00010111 00000000) (1531346)
     12     4        (alignment/padding gap)
     16     8   long CacheLinePadded.b         0
     24   128        (alignment/padding gap)
    152     8   long CacheLinePadded.a         0
Instance size: 160 bytes
Space losses: 132 bytes internal + 0 bytes external = 132 bytes total
```

We can see that the object using @Contended is 160 bytes. 128 bytes of padding, the default ContendedPaddingWidth, are inserted directly before field a.
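The sizes in the two listings can be reproduced by hand. The sketch below redoes the arithmetic under assumptions taken from the listings: a 12-byte header (64-bit HotSpot with compressed class pointers), 8-byte object alignment, and the default 128-byte ContendedPaddingWidth. It only mirrors the numbers shown above. It is not how HotSpot actually computes field layouts, and the class `LayoutMath` is hypothetical.

```java
/** Sketch reproducing the JOL numbers above by simple arithmetic. */
final class LayoutMath {
    static final int HEADER = 12;              // mark word (8) + compressed class pointer (4)
    static final int LONG = Long.BYTES;        // 8
    static final int ALIGN = 8;                // object and long alignment
    static final int CONTENDED_PADDING = 128;  // default -XX:ContendedPaddingWidth

    /** Rounds n up to the next multiple of ALIGN. */
    static int align(int n) {
        return (n + ALIGN - 1) / ALIGN * ALIGN;
    }

    static int unpaddedSize() {
        int offset = align(HEADER); // 16: longs start on an 8-byte boundary (4-byte gap)
        offset += 2 * LONG;         // two longs at 16 and 24
        return align(offset);       // 32
    }

    static int paddedSize() {
        int offset = align(HEADER); // 16: b starts here
        offset += LONG;             // b occupies 16..23
        offset += CONTENDED_PADDING;// 128-byte gap, so a starts at 152
        offset += LONG;             // a occupies 152..159
        return align(offset);       // 160
    }

    static int paddedLosses() {
        return (align(HEADER) - HEADER) + CONTENDED_PADDING; // 4 + 128 = 132
    }

    public static void main(String[] args) {
        System.out.println("unpadded: " + unpaddedSize() + " bytes"); // unpadded: 32 bytes
        System.out.println("padded:   " + paddedSize() + " bytes");   // padded:   160 bytes
        System.out.println("padded losses: " + paddedLosses());       // padded losses: 132
    }
}
```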

The problem with @Contended in JDK 9

sun.misc.Contended was introduced in JDK 8 to solve the padding problem.

But note that the annotation lives in the sun.misc package, which means it is generally not recommended for direct use.

Although its use is discouraged, it still works.

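But if you are using JDK 9 or later, you will find that sun.misc.Contended is gone!

This is because JDK 9 introduced JPMS (the Java Platform Module System), which restructured the JDK into modules and made it very different from JDK 8.

After some research, I found that sun.misc.Contended now lives in jdk.internal.vm.annotation and sun.misc.Cleaner in jdk.internal.ref. The jdk.internal.** packages are not exported to code outside the JDK by default. sun.misc.Unsafe is a partial exception: an internal version exists as jdk.internal.misc.Unsafe, but sun.misc.Unsafe itself remains accessible through the jdk.unsupported module.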

Someone might ask: can we simply change the import to the new package?

```java
import jdk.internal.vm.annotation.Contended;
```

Unfortunately, that still doesn't work:

```
error: package jdk.internal.vm.annotation is not visible
@jdk.internal.vm.annotation.Contended
^
(package jdk.internal.vm.annotation is declared in module java.base, which does not export it to the unnamed module)
```

This tells us what the problem is. Our code does not declare a module, so it belongs to the default "unnamed" module, and java.base does not export jdk.internal.vm.annotation to it. We need to make java.base export that package to the unnamed module.

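To achieve this, add the following flag to javac:

```
--add-exports java.base/jdk.internal.vm.annotation=ALL-UNNAMED
```

Now the code compiles normally. At run time, -XX:-RestrictContended is still needed for the annotation to take effect in our own classes.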

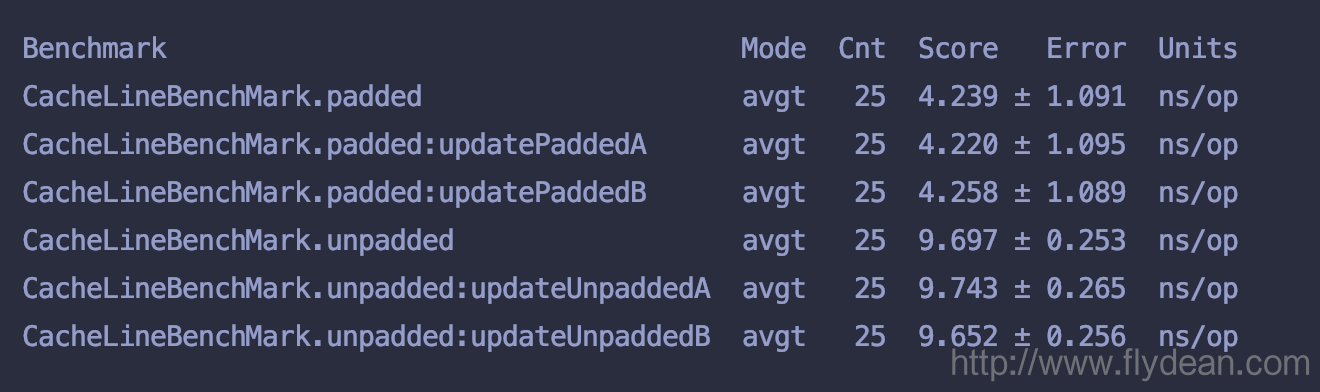
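The visibility rule behind the javac error can also be queried at run time with the `java.lang.Module` API (JDK 9+). The sketch below asks whether java.base exports jdk.internal.vm.annotation to the module of our own class. When run from the class path, that module is the unnamed module. The class name `ExportCheck` is hypothetical.

```java
/** Sketch: ask the module system the same question javac answered with its error. */
final class ExportCheck {
    static final String PKG = "jdk.internal.vm.annotation";

    static boolean visibleToUs() {
        Module javaBase = Object.class.getModule();  // the java.base module
        Module ours = ExportCheck.class.getModule(); // the unnamed module when on the class path
        return javaBase.isExported(PKG, ours);
    }

    public static void main(String[] args) {
        System.out.println("our module is named? " + ExportCheck.class.getModule().isNamed());
        System.out.println(PKG + " exported to us? " + visibleToUs());
    }
}
```

Run normally, both lines should print `false`. Starting the JVM itself with `--add-exports java.base/jdk.internal.vm.annotation=ALL-UNNAMED` should make the second line print `true`, because the javac flag only affects compilation.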

Comparison of padded and unpadded performance

As shown above, the padded object is 160 bytes, while the unpadded object is only 32 bytes.

Does a larger object mean slower execution?

Measurement settles it. We use JMH to test this in a multi-threaded environment:

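```java
import java.util.concurrent.TimeUnit;

import org.openjdk.jmh.annotations.*;
import org.openjdk.jmh.runner.Runner;
import org.openjdk.jmh.runner.RunnerException;
import org.openjdk.jmh.runner.options.Options;
import org.openjdk.jmh.runner.options.OptionsBuilder;

// Scope.Benchmark: one instance shared by all threads, so they really touch the same objects
@State(Scope.Benchmark)
@BenchmarkMode(Mode.AverageTime)
@OutputTimeUnit(TimeUnit.NANOSECONDS)
// -XX:-RestrictContended lets @Contended take effect in application classes
@Fork(value = 1, jvmArgsPrepend = "-XX:-RestrictContended")
@Warmup(iterations = 10)
@Measurement(iterations = 25)
@Threads(2)
public class CacheLineBenchMark {

    private CacheLine cacheLine = new CacheLine();

    private CacheLinePadded cacheLinePadded = new CacheLinePadded();

    // Group "unpadded": one thread increments a, the other increments b in the same object.
    // Returning the value keeps JMH from eliminating the work as dead code.
    @Group("unpadded")
    @GroupThreads(1)
    @Benchmark
    public long updateUnpaddedA() {
        return cacheLine.a++;
    }

    @Group("unpadded")
    @GroupThreads(1)
    @Benchmark
    public long updateUnpaddedB() {
        return cacheLine.b++;
    }

    // Group "padded": the same workload on the @Contended-padded object
    @Group("padded")
    @GroupThreads(1)
    @Benchmark
    public long updatePaddedA() {
        return cacheLinePadded.a++;
    }

    @Group("padded")
    @GroupThreads(1)
    @Benchmark
    public long updatePaddedB() {
        return cacheLinePadded.b++;
    }

    public static void main(String[] args) throws RunnerException {
        Options opt = new OptionsBuilder()
                .include(CacheLineBenchMark.class.getSimpleName())
                .build();
        new Runner(opt).run();
    }
}
```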

In the JMH code above, two threads increment a and b respectively. Let's look at the final results:

The results show that the padded object is larger, but a and b now sit in different cache lines. The two threads therefore no longer keep invalidating each other's copy and refetching the line, so the padded version runs faster.

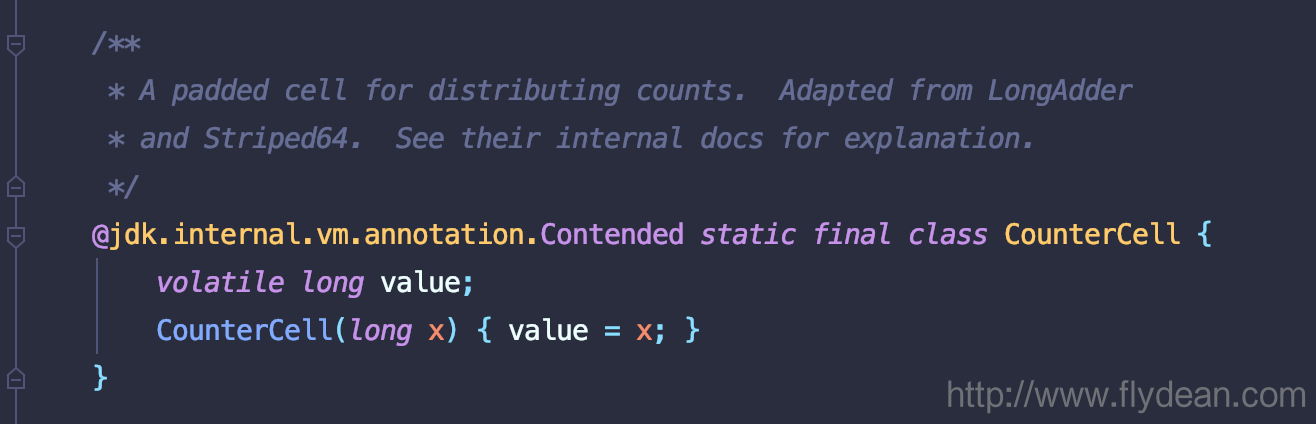

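Uses of @Contended in the JDK

In fact, the JDK source code itself uses the @Contended annotation. It appears in only a few places, but where it appears it matters.

For example, in Thread, the fields managed by ThreadLocalRandom (threadLocalRandomSeed, threadLocalRandomProbe and threadLocalRandomSecondarySeed) are annotated with @Contended("tlr"). This places them together in one padded group.

Another example is ConcurrentHashMap, whose CounterCell class is annotated with @Contended.

Other classes that use it include Exchanger, ForkJoinPool, and Striped64.

Interested readers can study these classes in detail.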
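Striped64 itself is package-private, but it is the base class of the public `java.util.concurrent.atomic.LongAdder`. Under contention, LongAdder spreads increments across Striped64's `@Contended`-padded cells. The sketch below (the class name `LongAdderDemo` is hypothetical) simply shows that application code gets this padding through a public API. It makes no performance claim.

```java
import java.util.concurrent.atomic.LongAdder;

/** Sketch: several threads incrementing one LongAdder (backed by Striped64 cells). */
final class LongAdderDemo {
    static long countWith(int threads, int incrementsPerThread) throws InterruptedException {
        LongAdder adder = new LongAdder();
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            workers[t] = new Thread(() -> {
                for (int i = 0; i < incrementsPerThread; i++) {
                    adder.increment(); // contended updates go to separate cells
                }
            });
            workers[t].start();
        }
        for (Thread w : workers) {
            w.join(); // wait for all increments to finish
        }
        return adder.sum(); // base value plus all cells; exact once the writers are done
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(countWith(2, 1_000_000)); // 2000000
    }
}
```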

Summary

Whether it lives in the original sun.misc package or the current jdk.internal.vm.annotation package, @Contended is intended for internal use by the JDK and is not recommended for application code.

This means the approach used above is unofficial: it works, but it is not officially supported. So is there an official way to solve the false-sharing problem?

If you know of one, feel free to leave a comment and discuss it with me!

The author of this article: those things about the flydean program

Link to this article: http://www.flydean.com/jvm-contend-false-sharing/

Source of this article: flydean's blog
