False Sharing

Contents

1. The basic structure of a computer
2. The cache line
3. False sharing
4. How to avoid false sharing

Caches store data in units of cache lines. When multiple threads modify variables that are independent of each other but happen to share the same cache line, they inadvertently degrade each other's performance. This is false sharing.

1. The basic structure of a computer

The figure below shows the basic structure of a computer. L1, L2, and L3 are the first-level, second-level, and third-level caches. The closer a cache is to the CPU, the faster and the smaller it is. The L1 cache is small but fast and sits right next to the CPU core that uses it; L2 is bigger and slower and is still used by a single CPU core; L3 is bigger and slower again and is shared by all CPU cores on a socket; finally, main memory is shared by all CPU cores on all sockets.

When the CPU performs an operation, it first looks for the data it needs in L1, then in L2, and then in L3. If the data is in none of these caches, it has to be fetched from main memory. The further away the data is, the longer the operation takes, so if you do something very frequently, you want to make sure its data stays in the L1 cache. In addition, when a piece of data is shared between threads, conceptually one thread has to write the data back to main memory before another thread can read the corresponding data from main memory.

The following table gives a rough idea of how long it takes the CPU to access data at each level:

| From CPU to | Approximate CPU cycles | Approximate time |
|---|---|---|
| Main memory | | about 60-80 ns |
| QPI bus transfer (between sockets, not drawn) | | about 20 ns |
| L3 cache | about 40-45 cycles | about 15 ns |
| L2 cache | about 10 cycles | about 3 ns |
| L1 cache | about 3-4 cycles | about 1 ns |
| Register | 1 cycle | |
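To get a feel for what these numbers mean in practice, the following is a minimal sketch that combines the latencies from the table into an expected cost per access. The class name `AccessCostSketch`, the hit fractions, and the 70 ns figure for main memory (the midpoint of the 60-80 ns range) are assumptions made for illustration, not measurements.

```java
final class AccessCostSketch {
    // Approximate latencies in nanoseconds, taken from the table above.
    static final double L1_NS = 1.0;
    static final double L2_NS = 3.0;
    static final double L3_NS = 15.0;
    // Assumption: midpoint of the 60-80 ns range given for main memory.
    static final double MEMORY_NS = 70.0;

    /**
     * Expected cost of one access, given the fraction of accesses that are
     * served by each level. The fractions are hypothetical inputs and are
     * expected to sum to 1.
     */
    static double expectedNanos(double l1, double l2, double l3, double memory) {
        return l1 * L1_NS + l2 * L2_NS + l3 * L3_NS + memory * MEMORY_NS;
    }

    public static void main(String[] args) {
        // Every access served by L1.
        System.out.println(expectedNanos(1.0, 0.0, 0.0, 0.0));
        // A made-up mix in which 1% of accesses go all the way to main memory.
        System.out.println(expectedNanos(0.90, 0.06, 0.03, 0.01));
    }
}
```

With the made-up mix above, the 1% of accesses that reach main memory contribute 0.7 ns of the roughly 2.2 ns total, which is why keeping hot data in L1 matters so much.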

2. The cache line

A cache is made up of many cache lines. Each cache line is usually 64 bytes and maps to a block of addresses in main memory. A Java long is 8 bytes, so one cache line can hold 8 long variables.

Whenever the CPU pulls data from main memory, it loads the adjacent data into the same cache line as well.

When you access an array of longs and one value of the array is loaded into the cache, the next 7 values are loaded along with it, so traversing the array is very fast. In fact, any data structure allocated in a contiguous block of memory can be traversed very quickly.
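The arithmetic behind "8 longs per cache line" can be sketched as follows. This is an illustration only: the class name `CacheLineMath` is made up, the 64-byte line size is the typical value quoted above, and the sketch assumes that element 0 of the array starts exactly on a cache line boundary, which a real JVM does not guarantee.

```java
final class CacheLineMath {
    // Assumption: 64-byte cache lines, as stated above; real hardware may differ.
    static final int CACHE_LINE_BYTES = 64;

    /** Number of long values that fit in one cache line. */
    static int longsPerLine() {
        return CACHE_LINE_BYTES / Long.BYTES;
    }

    /**
     * Cache line number of element i of a long array, assuming (hypothetically)
     * that element 0 starts exactly on a cache line boundary.
     */
    static int lineOfElement(int i) {
        return (i * Long.BYTES) / CACHE_LINE_BYTES;
    }

    public static void main(String[] args) {
        System.out.println("longs per line: " + longsPerLine());
        for (int i = 0; i < 10; i++) {
            System.out.println("element " + i + " -> line " + lineOfElement(i));
        }
    }
}
```

Under these assumptions, elements 0 to 7 map to line 0 and element 8 is the first one on line 1, so a sequential traversal misses the cache at most once every 8 elements.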

Example:

    package com.thread.falsesharing;

    /**
     * @Author: 98050
     * @Time: 2018-12-19 23:25
     * @Feature: cache line behavior
     */
    public class CacheLineEffect {

        private static long[][] result;

        public static void main(String[] args) {
            int row = 1024 * 1024;
            int col = 8;
            result = new long[row][];
            for (int i = 0; i < row; i++) {
                result[i] = new long[col];
                for (int j = 0; j < col; j++) {
                    result[i][j] = i + j;
                }
            }

            // Row by row: the 8 longs of one row are adjacent in memory.
            long start = System.currentTimeMillis();
            for (int i = 0; i < row; i++) {
                for (int j = 0; j < col; j++) {
                    result[i][j] = 0;
                }
            }
            System.out.println("Using cache lines, loop time (ms): " + (System.currentTimeMillis() - start));

            // Column by column: every write lands in a different row.
            long start2 = System.currentTimeMillis();
            for (int i = 0; i < col; i++) {
                for (int j = 0; j < row; j++) {
                    result[j][i] = 1;
                }
            }
            System.out.println("Not using cache lines, loop time (ms): " + (System.currentTimeMillis() - start2));
        }
    }

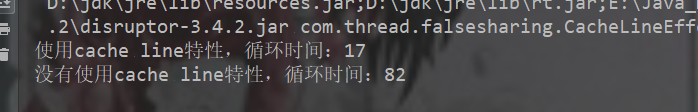

result:

3. False sharing

As shown in the figure above, the variables x and y are both held in the CPU's first-level and second-level caches. When thread 1 uses CPU1 to update x, it first modifies the cache line in CPU1's first-level cache that holds x. The cache coherence protocol then invalidates the corresponding cache line in CPU2. When thread 2 later writes x, it has to look in the second-level cache, so the benefit of the first-level cache, which is faster than the second-level cache, is lost. In the worst case, if the CPU has only one level of cache, this leads to frequent accesses to main memory.
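The invalidation traffic described above can be illustrated with a deliberately simplified toy model. It is not a real coherence protocol: the class `InvalidationToy`, the byte addresses, and the rule "a write invalidates the line if the other core wrote it last" are all assumptions made for this sketch.

```java
final class InvalidationToy {
    // Assumption: 64-byte cache lines.
    static final int CACHE_LINE_BYTES = 64;

    /**
     * Two cores take turns writing: core 0 writes addr0, then core 1 writes
     * addr1, repeated `rounds` times. Returns how many of those writes hit a
     * cache line that the other core wrote last, that is, how many writes
     * would have to invalidate the other core's copy in this toy model.
     */
    static long countInvalidations(long addr0, long addr1, int rounds) {
        long line0 = addr0 / CACHE_LINE_BYTES;
        long line1 = addr1 / CACHE_LINE_BYTES;
        // Both cores use tracking slot 0 if their addresses share a line.
        int slotOfCore1 = (line0 == line1) ? 0 : 1;
        int[] lastWriter = {-1, -1}; // -1 means nobody has written the line yet
        long invalidations = 0;
        for (int r = 0; r < rounds; r++) {
            for (int core = 0; core < 2; core++) {
                int slot = (core == 0) ? 0 : slotOfCore1;
                if (lastWriter[slot] != -1 && lastWriter[slot] != core) {
                    invalidations++; // the other core's copy is now stale
                }
                lastWriter[slot] = core;
            }
        }
        return invalidations;
    }

    public static void main(String[] args) {
        // Two variables 8 bytes apart share a line; 64 bytes apart they do not.
        System.out.println("same line:      " + countInvalidations(0, 8, 1000));
        System.out.println("different line: " + countInvalidations(0, 64, 1000));
    }
}
```

In this model, 1000 rounds on a shared line produce 1999 invalidations (every write except the very first), while the same writes on separate lines produce none, even though the two cores never touch the same variable.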

Example:

    package com.thread.falsesharing;

    /**
     * @Author: 98050
     * @Time: 2018-12-20 12:06
     * @Feature: false sharing
     */
    public class FalseSharing implements Runnable {

        /** Number of threads. */
        public static int NUM_THREADS = 4;

        /** Number of iterations per thread. */
        public final static long ITERATIONS = 500L * 1000L * 1000L;

        private final int arrayIndex;
        private static VolatileLong[] longs;
        public static long SUM_TIME = 0L;

        public FalseSharing(final int arrayIndex) {
            this.arrayIndex = arrayIndex;
        }

        @Override
        public void run() {
            long i = ITERATIONS + 1;
            while (0 != --i) {
                longs[arrayIndex].value = i;
            }
        }

        public final static class VolatileLong {
            public volatile long value = 0L;
            public long p1, p2, p3, p4, p5, p6; // cache line padding
        }

        private static void runTest() throws InterruptedException {
            Thread[] thread = new Thread[NUM_THREADS];
            for (int i = 0; i < thread.length; i++) {
                thread[i] = new Thread(new FalseSharing(i));
            }
            for (Thread t : thread) {
                t.start();
            }
            for (Thread t : thread) {
                t.join();
            }
        }

        public static void main(String[] args) throws InterruptedException {
            Thread.sleep(10000);
            for (int i = 0; i < 10; i++) {
                System.out.println(i);
                if (args.length == 1) {
                    NUM_THREADS = Integer.parseInt(args[0]);
                }
                longs = new VolatileLong[NUM_THREADS];
                for (int j = 0; j < longs.length; j++) {
                    longs[j] = new VolatileLong();
                }
                final long start = System.nanoTime();
                runTest();
                final long end = System.nanoTime();
                SUM_TIME += end - start;
            }
            // Average over the 10 runs, in nanoseconds.
            System.out.println("Average time (ns): " + SUM_TIME / 10);
        }
    }

Four threads each modify a different element of an array. The element type is VolatileLong, which has a single long member, value, and 6 unused long members. value is declared volatile so that changes to it are visible to all threads. The program is run in two configurations: once with the cache line padding (the p1 to p6 line) commented out, and once with the padding in place. To make the numbers reasonably reliable, the program reports the average time over 10 runs.

The two programs have identical logic, yet the version without padding takes about twice as long as the padded one. The theory of false sharing explains why. Without the padding, VolatileLong has only one long member, so each array element is 16 bytes (8 bytes for the long plus 8 bytes for the object header). The four elements of the longs array (16*4 = 64 bytes) can therefore be loaded into the same cache line, and with 4 threads writing to that line at the same time, false sharing happens quietly.
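The size argument above can be checked with a little arithmetic. The sketch below assumes, hypothetically, that the objects are laid out back to back starting at a known byte offset; a real JVM decides object placement itself, so the class `LayoutSketch` and its inputs are illustrative only.

```java
final class LayoutSketch {
    // Assumption: 64-byte cache lines.
    static final int CACHE_LINE_BYTES = 64;

    /**
     * Number of distinct cache lines in which `count` objects start, if the
     * objects are `objectBytes` long and laid out back to back beginning at
     * the non-negative byte offset `base`.
     */
    static int distinctLines(long base, int objectBytes, int count) {
        int lines = 0;
        long previous = -1; // no line seen yet
        for (int i = 0; i < count; i++) {
            long line = (base + (long) i * objectBytes) / CACHE_LINE_BYTES;
            if (line != previous) { // start offsets only grow, so a change means a new line
                lines++;
                previous = line;
            }
        }
        return lines;
    }

    public static void main(String[] args) {
        // 8-byte header + 8-byte long, as assumed in the text above.
        System.out.println("unpadded: " + distinctLines(0, 16, 4));
        // 8-byte header + 8-byte long + 6 padding longs (48 bytes).
        System.out.println("padded:   " + distinctLines(0, 64, 4));
    }
}
```

Under these assumptions, the four 16-byte objects start in a single cache line, while the four 64-byte objects each start in a line of their own.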

False sharing occurs easily in multicore programming and is very hard to spot. For example, ArrayBlockingQueue has three member variables:

- takeIndex: the index of the element to be taken

- putIndex: the index of the position where the element can be inserted

- count: the number of elements in the queue

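These three variables can easily fit into one cache line, but their modifications have little to do with each other. Each modification therefore invalidates the previously cached copy of the line, so the cached data cannot be shared as effectively as intended.

As shown in the figure above, when a producer thread puts an element into the ArrayBlockingQueue, putIndex is modified. This invalidates the cache line in the consumer thread's cache, which then has to be re-read from main memory. The more threads and the more cores there are, the greater the negative effect on performance.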

4. How to avoid false sharing

Cache line padding

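A cache line is 64 bytes, and the object header of a Java object takes a fixed 8 bytes (32-bit systems) or 12 bytes (64-bit systems, where compression is enabled by default; 16 bytes without compression). It is therefore enough to add 6 unused longs, 6*8 = 48 bytes of padding, to place different VolatileLong objects in different cache lines and so avoid false sharing. (On a 64-bit system it does not matter that this exceeds the 64 bytes of a cache line, as long as different threads do not operate on the same cache line.)

Java 8 provides an official solution in the form of a new annotation, @sun.misc.Contended. A class with this annotation is padded to cache line boundaries automatically. Note that the annotation has no effect by default; it only takes effect when the JVM is started with -XX:-RestrictContended.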
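Padding on one side only, as in VolatileLong, depends on how the JVM happens to order the fields. A variant that is often used instead, and which is not part of the example above, pads on both sides through a small class hierarchy. The sketch below shows that variant; the class names are made up, and it relies on the common HotSpot behavior of laying out superclass fields before subclass fields, which the Java specification does not guarantee.

```java
final class PaddedCounterDemo {
    // Padding before the value (7 longs = 56 bytes).
    static class LeftPadding {
        long p1, p2, p3, p4, p5, p6, p7;
    }

    // The value that is actually written by a thread.
    static class Value extends LeftPadding {
        volatile long value;
    }

    // Padding after the value (another 56 bytes).
    static final class PaddedLong extends Value {
        long q1, q2, q3, q4, q5, q6, q7;
    }

    /** Each thread writes 1..iterations into its own cell; returns the final values. */
    static long[] run(int threads, long iterations) {
        PaddedLong[] cells = new PaddedLong[threads];
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            final PaddedLong cell = new PaddedLong();
            cells[t] = cell;
            workers[t] = new Thread(() -> {
                for (long i = 1; i <= iterations; i++) {
                    cell.value = i;
                }
            });
        }
        for (Thread w : workers) {
            w.start();
        }
        try {
            for (Thread w : workers) {
                w.join();
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting for workers", e);
        }
        long[] result = new long[threads];
        for (int t = 0; t < threads; t++) {
            result[t] = cells[t].value;
        }
        return result;
    }

    public static void main(String[] args) {
        long start = System.nanoTime();
        long[] finalValues = run(4, 10_000_000L);
        long elapsedMs = (System.nanoTime() - start) / 1_000_000;
        System.out.println("elapsed ms: " + elapsedMs + ", final value: " + finalValues[0]);
    }
}
```

Replacing PaddedLong with a class that has only the volatile field gives an unpadded baseline to compare timings against; the actual difference depends on the hardware and the JVM.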
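Whether the annotation is available depends on the JDK in use. The following sketch only checks, by name, whether the annotation class can be loaded on the running JVM. The class `ContendedProbe` is made up for this illustration, and the second candidate name, `jdk.internal.vm.annotation.Contended`, is the location used by JDK 9 and later; it is not mentioned in the text above and is not accessible to application code by default.

```java
final class ContendedProbe {
    /** Returns true if a class with the given name can be loaded, without initializing it. */
    static boolean isLoadable(String className) {
        try {
            Class.forName(className, false, ContendedProbe.class.getClassLoader());
            return true;
        } catch (ClassNotFoundException e) {
            return false;
        }
    }

    /** Returns the first candidate annotation class found on this JVM, or null if none is found. */
    static String findContendedAnnotation() {
        String[] candidates = {
            "sun.misc.Contended",                  // Java 8, as named in the text
            "jdk.internal.vm.annotation.Contended" // JDK 9 and later (internal)
        };
        for (String name : candidates) {
            if (isLoadable(name)) {
                return name;
            }
        }
        return null;
    }

    public static void main(String[] args) {
        // On Java 8 the annotation would be applied like this:
        //   @sun.misc.Contended
        //   public final static class VolatileLong { public volatile long value = 0L; }
        // and the JVM started with -XX:-RestrictContended.
        System.out.println("Contended annotation: " + findContendedAnnotation());
    }
}
```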